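For the concept of watermarks, see "zoned page frame allocator--3 (understanding watermarks)".

This article works through a concrete example to deepen that understanding.

1. zoneinfo

The /proc/zoneinfo node shows information about each zone. Only part of the output is shown below. This development platform has a single node and a single zone type, zone DMA32.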

    Node 0, zone    DMA32
      ......
      pages free     56388
            min      1139
            low      7295
            high     7621
            spanned  521952
            present  345696
            managed  326626
            protection: (0, 0, 0)
          nr_free_pages 56388
      ......

How are the min, low and high values computed? They are derived mainly from min_free_kbytes, so we first look at how that value itself is computed.

2. Computing min_free_kbytes

According to Documentation/sysctl/vm.txt:

    ==============================================================

    min_free_kbytes:

    This is used to force the Linux VM to keep a minimum number
    of kilobytes free.  The VM uses this number to compute a
    watermark[WMARK_MIN] value for each lowmem zone in the system.
    Each lowmem zone gets a number of reserved free pages based
    proportionally on its size.

    Some minimal amount of memory is needed to satisfy PF_MEMALLOC
    allocations; if you set this to lower than 1024KB, your system will
    become subtly broken, and prone to deadlock under high loads.

    Setting this too high will OOM your machine instantly.

    =============================================================

Two points follow from this text:

- min_free_kbytes is the minimum amount of free memory the system keeps in reserve.

- watermark[WMARK_MIN] is computed from min_free_kbytes.

The current value of min_free_kbytes can be read from the node below. Note that 4556/4 = 1139 is exactly the min value shown above, because the page size is 4 KB and there is only one zone here, DMA32.

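    console:/ # cat /proc/sys/vm/min_free_kbytes
    4556

The function below walks the zones and accumulates in sum the number of pages each zone has above its high watermark. For each zone that number is managed_pages minus watermark[WMARK_HIGH], so the result is the total number of pages above the high watermark across the zones in the zonelist.

Here high_wmark_pages(zone) returns watermark[WMARK_HIGH]. What puzzled me is that watermark[WMARK_HIGH] is derived from watermark[WMARK_MIN], and we are here precisely to compute watermark[WMARK_MIN], so why would watermark[WMARK_HIGH] be needed? I added a print at this point and found that on my platform the high value is 0 the first time it is read, so the function simply returns managed_pages, i.e. 326626.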

    /**
     * nr_free_zone_pages - count number of pages beyond high watermark
     * @offset: The zone index of the highest zone
     *
     * nr_free_zone_pages() counts the number of pages which are beyond the
     * high watermark within all zones at or below a given zone index.  For each
     * zone, the number of pages is calculated as:
     *
     *     nr_free_zone_pages = managed_pages - high_pages
     */
    static unsigned long nr_free_zone_pages(int offset)
    {
        struct zoneref *z;
        struct zone *zone;

        /* Just pick one node, since fallback list is circular */
        unsigned long sum = 0;

        struct zonelist *zonelist = node_zonelist(numa_node_id(), GFP_KERNEL);

        for_each_zone_zonelist(zone, z, zonelist, offset) {
            unsigned long size = zone->managed_pages;
            unsigned long high = high_wmark_pages(zone);

            if (size > high)
                sum += size - high;
        }

        return sum;
    }

    /**
     * nr_free_buffer_pages - count number of pages beyond high watermark
     *
     * nr_free_buffer_pages() counts the number of pages which are beyond the high
     * watermark within ZONE_DMA and ZONE_NORMAL.
     */
    unsigned long nr_free_buffer_pages(void)
    {
        return nr_free_zone_pages(gfp_zone(GFP_USER));
    }

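The watermarks are initialised by init_per_zone_wmark_min(), listed below. Plugging in the value obtained above, 326626 pages, gives lowmem_kbytes = 326626 * 4 = 1306504 and min_free_kbytes = int_sqrt(1306504 * 16) = 4572 on our platform, which is close to the 4556 read from the node above.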
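The arithmetic can be reproduced in user space. The sketch below is only an approximation of the kernel logic under stated assumptions: isqrt_floor() is a hypothetical stand-in for the kernel's int_sqrt(), calc_min_free_kbytes() is an illustrative name that does not exist in the kernel, and the user_min_free_kbytes override is ignored.

```c
#include <assert.h>

/* Floor of the square root; a simple stand-in for the kernel's int_sqrt(). */
static unsigned long long isqrt_floor(unsigned long long x)
{
    unsigned long long r = 0;

    while ((r + 1) * (r + 1) <= x)
        r++;
    return r;
}

/*
 * Hypothetical helper mirroring the core of init_per_zone_wmark_min().
 * lowmem_pages: the value nr_free_buffer_pages() would return.
 * page_kb:      page size in KB (4 on the platform in this article).
 */
static unsigned long long calc_min_free_kbytes(unsigned long long lowmem_pages,
                                               unsigned long long page_kb)
{
    unsigned long long lowmem_kbytes, kb;

    assert(page_kb > 0);

    lowmem_kbytes = lowmem_pages * page_kb;
    kb = isqrt_floor(lowmem_kbytes * 16);

    /* Same clamping as the kernel: at least 128 KB, at most 64 MB. */
    if (kb < 128)
        kb = 128;
    if (kb > 65536)
        kb = 65536;
    return kb;
}
```

Calling calc_min_free_kbytes(326626, 4) returns 4572, and calc_min_free_kbytes(4096, 4) (a 16 MB machine) returns 512, matching the first row of the table in the kernel comment.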

    /*
     * Initialise min_free_kbytes.
     *
     * For small machines we want it small (128k min).  For large machines
     * we want it large (64MB max).  But it is not linear, because network
     * bandwidth does not increase linearly with machine size.  We use
     *
     *     min_free_kbytes = 4 * sqrt(lowmem_kbytes), for better accuracy:
     *     min_free_kbytes = sqrt(lowmem_kbytes * 16)
     *
     * which yields
     *
     * 16MB:    512k
     * 32MB:    724k
     * 64MB:    1024k
     * 128MB:   1448k
     * 256MB:   2048k
     * 512MB:   2896k
     * 1024MB:  4096k
     * 2048MB:  5792k
     * 4096MB:  8192k
     * 8192MB:  11584k
     * 16384MB: 16384k
     */
    int __meminit init_per_zone_wmark_min(void)
    {
        unsigned long lowmem_kbytes;
        int new_min_free_kbytes;

        lowmem_kbytes = nr_free_buffer_pages() * (PAGE_SIZE >> 10);
        new_min_free_kbytes = int_sqrt(lowmem_kbytes * 16);

        if (new_min_free_kbytes > user_min_free_kbytes) {
            min_free_kbytes = new_min_free_kbytes;
            if (min_free_kbytes < 128)
                min_free_kbytes = 128;
            if (min_free_kbytes > 65536)
                min_free_kbytes = 65536;
        } else {
            pr_warn("min_free_kbytes is not updated to %d because user defined value %d is preferred\n",
                    new_min_free_kbytes, user_min_free_kbytes);
        }

        // set up the watermarks of each zone
        setup_per_zone_wmarks();
        refresh_zone_stat_thresholds();
        setup_per_zone_lowmem_reserve();

    #ifdef CONFIG_NUMA
        setup_min_unmapped_ratio();
        setup_min_slab_ratio();
    #endif

        khugepaged_min_free_kbytes_update();

        return 0;
    }

3. Setting up the per-zone watermarks

The watermark values of each zone are determined by the following function:

    static void __setup_per_zone_wmarks(void)
    {
        // pages_min = 4556 / 4 = 1139
        unsigned long pages_min = min_free_kbytes >> (PAGE_SHIFT - 10);
        // extra_free_kbytes appears to be an Android patch; the 5.18 kernel
        // I checked does not contain it. On this platform the value is 24300,
        // so pages_low = 24300 / 4 = 6075
        unsigned long pages_low = extra_free_kbytes >> (PAGE_SHIFT - 10);
        unsigned long lowmem_pages = 0;
        struct zone *zone;
        unsigned long flags;

        // Sum managed_pages over every zone except ZONE_HIGHMEM,
        // i.e. lowmem_pages = 326626
        /* Calculate total number of !ZONE_HIGHMEM pages */
        for_each_zone(zone) {
            if (!is_highmem(zone))
                lowmem_pages += zone->managed_pages;
        }

        for_each_zone(zone) {
            u64 min, low;

            spin_lock_irqsave(&zone->lock, flags);
            // min = 1139 * 326626 = 372027014
            min = (u64)pages_min * zone->managed_pages;
            // take the quotient: min = 1139
            do_div(min, lowmem_pages);
            low = (u64)pages_low * zone->managed_pages;
            // vm_total_pages is the return value of nr_free_pagecache_pages();
            // logging shows it is 340294, which is not the managed_pages value,
            // so on our platform low = 6075 * 326626 / 340294 = 5830
            do_div(low, vm_total_pages);

            if (is_highmem(zone)) {
                /*
                 * __GFP_HIGH and PF_MEMALLOC allocations usually don't
                 * need highmem pages, so cap pages_min to a small
                 * value here.
                 *
                 * The WMARK_HIGH-WMARK_LOW and (WMARK_LOW-WMARK_MIN)
                 * deltas control asynch page reclaim, and so should
                 * not be capped for highmem.
                 */
                unsigned long min_pages;

                min_pages = zone->managed_pages / 1024;
                min_pages = clamp(min_pages, SWAP_CLUSTER_MAX, 128UL);
                zone->watermark[WMARK_MIN] = min_pages;
            } else {
                /*
                 * If it's a lowmem zone, reserve a number of pages
                 * proportionate to the zone's size.
                 */
                // our platform takes this branch, giving
                // zone->watermark[WMARK_MIN] = 1139
                zone->watermark[WMARK_MIN] = min;
            }

            /*
             * Set the kswapd watermarks distance according to the
             * scale factor in proportion to available memory, but
             * ensure a minimum size on small systems.
             */
            // min >> 2 = 1139 >> 2 = 284 and mult_frac(326626, 10, 10000) = 326,
            // so min = max(284, 326) = 326
            min = max_t(u64, min >> 2,
                        mult_frac(zone->managed_pages,
                                  watermark_scale_factor, 10000));

            // this is where the low value from the Android patch takes effect:
            // zone->watermark[WMARK_LOW] = 1139 + 5830 + 326 = 7295
            zone->watermark[WMARK_LOW]  = min_wmark_pages(zone) +
                                          low + min;
            // watermark[WMARK_HIGH] is watermark[WMARK_LOW] plus one more min:
            // zone->watermark[WMARK_HIGH] = 1139 + 5830 + 326 * 2 = 7621
            zone->watermark[WMARK_HIGH] = min_wmark_pages(zone) +
                                          low + min * 2;

            spin_unlock_irqrestore(&zone->lock, flags);
        }

        /* update totalreserve_pages */
        calculate_totalreserve_pages();
    }

    /**
     * setup_per_zone_wmarks - called when min_free_kbytes changes
     * or when memory is hot-{added|removed}
     *
     * Ensures that the watermark[min,low,high] values for each zone are set
     * correctly with respect to min_free_kbytes.
     */
    void setup_per_zone_wmarks(void)
    {
        static DEFINE_SPINLOCK(lock);

        spin_lock(&lock);
        __setup_per_zone_wmarks();
        spin_unlock(&lock);
    }
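To check the numbers end to end, the lowmem branch of __setup_per_zone_wmarks() can be replayed in user space. This is a sketch under assumptions, not the kernel code: struct wmark_inputs, struct wmarks and calc_lowmem_wmarks() are hypothetical names, locking and the highmem branch are left out, and mult_frac() is replaced by a plain multiply-then-divide, which gives the same result as long as the product does not overflow.

```c
#include <assert.h>
#include <stdint.h>

/* Resulting watermarks of one lowmem zone, in pages. */
struct wmarks {
    uint64_t min, low, high;
};

/* Inputs read from the platform (all names here are illustrative). */
struct wmark_inputs {
    uint64_t min_free_kbytes;
    uint64_t extra_free_kbytes;       /* Android patch; 0 without it */
    uint64_t watermark_scale_factor;
    uint64_t managed_pages;           /* managed pages of this zone */
    uint64_t lowmem_pages;            /* managed pages of all !highmem zones */
    uint64_t vm_total_pages;
    unsigned int page_shift;          /* 12 for 4 KB pages */
};

static struct wmarks calc_lowmem_wmarks(const struct wmark_inputs *in)
{
    struct wmarks w;
    uint64_t pages_min, pages_low, low, step, scaled;

    assert(in->page_shift >= 10);
    assert(in->lowmem_pages > 0 && in->vm_total_pages > 0);

    /* Convert the two sysctl values from KB to pages. */
    pages_min = in->min_free_kbytes >> (in->page_shift - 10);
    pages_low = in->extra_free_kbytes >> (in->page_shift - 10);

    /* Share of each reserve that belongs to this zone. */
    w.min = pages_min * in->managed_pages / in->lowmem_pages;
    low = pages_low * in->managed_pages / in->vm_total_pages;

    /* Distance between watermarks: max(min / 4, managed * factor / 10000). */
    step = w.min >> 2;
    scaled = in->managed_pages * in->watermark_scale_factor / 10000;
    if (scaled > step)
        step = scaled;

    w.low = w.min + low + step;
    w.high = w.min + low + 2 * step;
    return w;
}
```

With the values from this platform (min_free_kbytes 4556, extra_free_kbytes 24300, watermark_scale_factor 10, managed_pages and lowmem_pages 326626, vm_total_pages 340294, page_shift 12) the function returns min 1139, low 7295 and high 7621, the same three numbers that /proc/zoneinfo reports in section 1.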

On this platform extra_free_kbytes is 24300:

    console:/ # cat /proc/sys/vm/extra_free_kbytes
    24300

watermark_scale_factor is 10 on this platform:

    console:/ # cat /proc/sys/vm/watermark_scale_factor
    10

Why does Android add extra_free_kbytes? The main purpose is to widen the gap between watermark[WMARK_LOW] and watermark[WMARK_MIN].

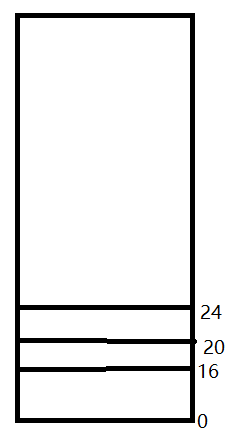

Below is the zoneinfo captured from my local Ubuntu development machine. The gap between min and low is small: low is 1.25 times min and high is 1.5 times min.

    Node 0, zone      DMA
      ......
      pages free     3977
            min      16
            low      20
            high     24
            spanned  4095
            present  3998
            managed  3977
            protection: (0, 3116, 15719, 15719, 15719)
      ......
    Node 0, zone    DMA32
      pages free     417377
            min      3346
            low      4182
            high     5018
            spanned  1044480
            present  825004
            managed  808543
            protection: (0, 0, 12602, 12602, 12602)
      ......
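The 1.25x and 1.5x ratios fall out of the watermark formula once the extra_free_kbytes term is absent. The sketch below reproduces the Ubuntu numbers; stock_wmarks() is a hypothetical helper name, and it assumes the Ubuntu machine runs with the default watermark_scale_factor of 10, which the dump above does not show.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical helper: low/high watermarks of a lowmem zone on a kernel
 * without the extra_free_kbytes patch. min is the zone's WMARK_MIN in pages.
 */
static void stock_wmarks(uint64_t min, uint64_t managed_pages,
                         uint64_t scale_factor,
                         uint64_t *low, uint64_t *high)
{
    /* Step between watermarks: max(min / 4, managed * factor / 10000). */
    uint64_t step = min >> 2;
    uint64_t scaled = managed_pages * scale_factor / 10000;

    assert(low && high);

    if (scaled > step)
        step = scaled;

    *low = min + step;        /* about 1.25 * min when min / 4 dominates */
    *high = min + 2 * step;   /* about 1.5 * min when min / 4 dominates */
}
```

For zone DMA (min 16, managed 3977) the step is max(4, 3) = 4, giving low 20 and high 24. For zone DMA32 (min 3346, managed 808543) the step is max(836, 808) = 836, giving low 4182 and high 5018. In both zones min / 4 is the larger term, which is why the ratios come out as 1.25 and 1.5.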

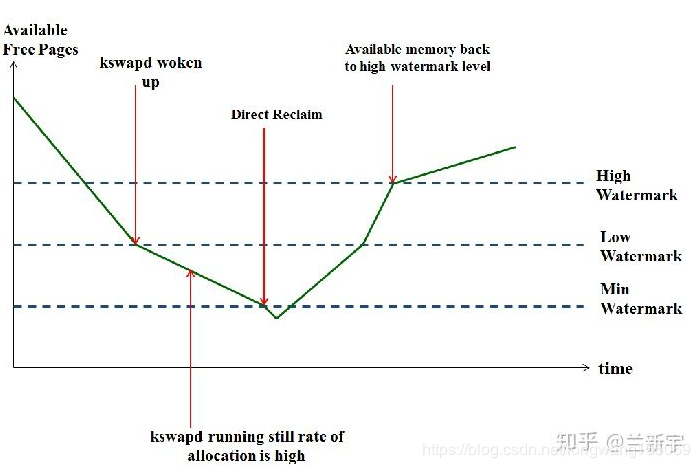

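From the figure above:

- The first allocation attempt is checked against the LOW watermark. When the remaining free pages drop below LOW, the kswapd kernel thread is woken to reclaim memory.

- If reclaim is effective and free pages rise above the HIGH watermark, kswapd stops reclaiming.

- If reclaim is not effective and free memory drops below the MIN watermark, direct reclaim is performed, which gradually raises the amount of free memory.

- If reclaim still makes too little progress, the OOM killer is invoked to kill processes.

- kswapd periodic reclaim

kswapd is the Linux kernel thread responsible for page reclaim. When free memory falls below low, memory is under some pressure, and kswapd is woken once the current allocation completes. kswapd then reclaims pages until free memory reaches the high watermark, at which point the system considers memory no longer tight and stops. Note that in this case the allocation triggers reclaim but is not blocked by it; the two run asynchronously. The "free memory" referred to here is actually the zone's total free memory minus its lowmem_reserve value.

How much memory does kswapd have to reclaim before its job is done? Just enough to bring free memory back up to the high watermark, so the amount depends on the gap between the current free memory and high: the larger the gap, the more has to be reclaimed. low can be seen as a warning line and high as a safe line.

- Direct reclaim under memory shortage

When free memory reaches or falls below min, memory is severely short and direct reclaim is triggered. This is the default behaviour: the allocator waits synchronously for reclaim to finish and only then allocates. There is one special case: if the allocating task has PF_MEMALLOC set and the current free memory can satisfy the request, the allocation happens first and reclaim follows.

Using PF_MEMALLOC (PF stands for per-process flag) effectively ignores the watermarks, so the corresponding allocation flag is ALLOC_NO_WATERMARKS. Being able to take memory below min also means the process may use almost all of memory, so there is a matching GFP flag, __GFP_MEMALLOC.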

    if (gfp_mask & __GFP_MEMALLOC)
        return ALLOC_NO_WATERMARKS;

    if (alloc_flags & ALLOC_NO_WATERMARKS)
        set_page_pfmemalloc(page);

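Which process should have the right to allocate without waiting for reclaim when memory is critically short? kswapd is the obvious candidate: it is itself responsible for reclaiming memory, and it needs only a small amount of memory to keep running and then free much more.

Although kswapd is woken and queued for scheduling while free memory is between low and min, by the time it actually runs free memory may already have dropped below min. So one purpose of the min reserve is to guarantee that special tasks such as kswapd can get the memory they need immediately when they need it.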
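The regimes discussed so far can be summarised as a small classifier. This is a deliberately simplified sketch: enum reclaim_action and classify_alloc() are hypothetical names, the thresholds follow the wording of this article (below low wakes kswapd, at or below min means direct reclaim), and the real allocator also accounts for lowmem_reserve, allocation order and further flags.

```c
#include <assert.h>

enum reclaim_action {
    RECLAIM_NONE,    /* enough free pages: allocate, nothing else happens */
    RECLAIM_KSWAPD,  /* below low: allocate and wake kswapd (asynchronous) */
    RECLAIM_DIRECT,  /* at or below min: reclaim synchronously, then allocate */
    RECLAIM_BYPASS   /* at or below min, but watermarks are ignored */
};

/*
 * Hypothetical helper: decide what an allocation would trigger.
 * free_pages:    free pages of the zone (minus lowmem_reserve in the kernel)
 * min, low:      the zone's WMARK_MIN and WMARK_LOW, in pages
 * no_watermarks: non-zero for PF_MEMALLOC / __GFP_MEMALLOC style requests
 */
static enum reclaim_action classify_alloc(unsigned long free_pages,
                                          unsigned long min,
                                          unsigned long low,
                                          int no_watermarks)
{
    assert(min <= low);

    if (free_pages <= min)
        return no_watermarks ? RECLAIM_BYPASS : RECLAIM_DIRECT;
    if (free_pages < low)
        return RECLAIM_KSWAPD;
    return RECLAIM_NONE;
}
```

With the Android watermarks from section 1 (min 1139, low 7295), 56388 free pages need no reclaim, 7000 free pages wake kswapd, and 1000 free pages lead to direct reclaim unless the request may ignore the watermarks.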

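Take the Ubuntu watermarks shown earlier as an example, where zone DMA has min=16, low=20 and high=24.

Suppose free memory is currently below LOW but still above MIN, so kswapd is running in the background to reclaim memory. If a large process suddenly requests a lot of memory, kswapd cannot reclaim as fast as memory is being allocated, free memory drops to the MIN watermark, and direct reclaim is triggered, which hurts system performance badly. Seen this way, the gap between MIN and LOW in the stock Linux code is too small and it is easy to end up in direct reclaim. This is why the Android kernel adds the extra_free_kbytes variable.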
